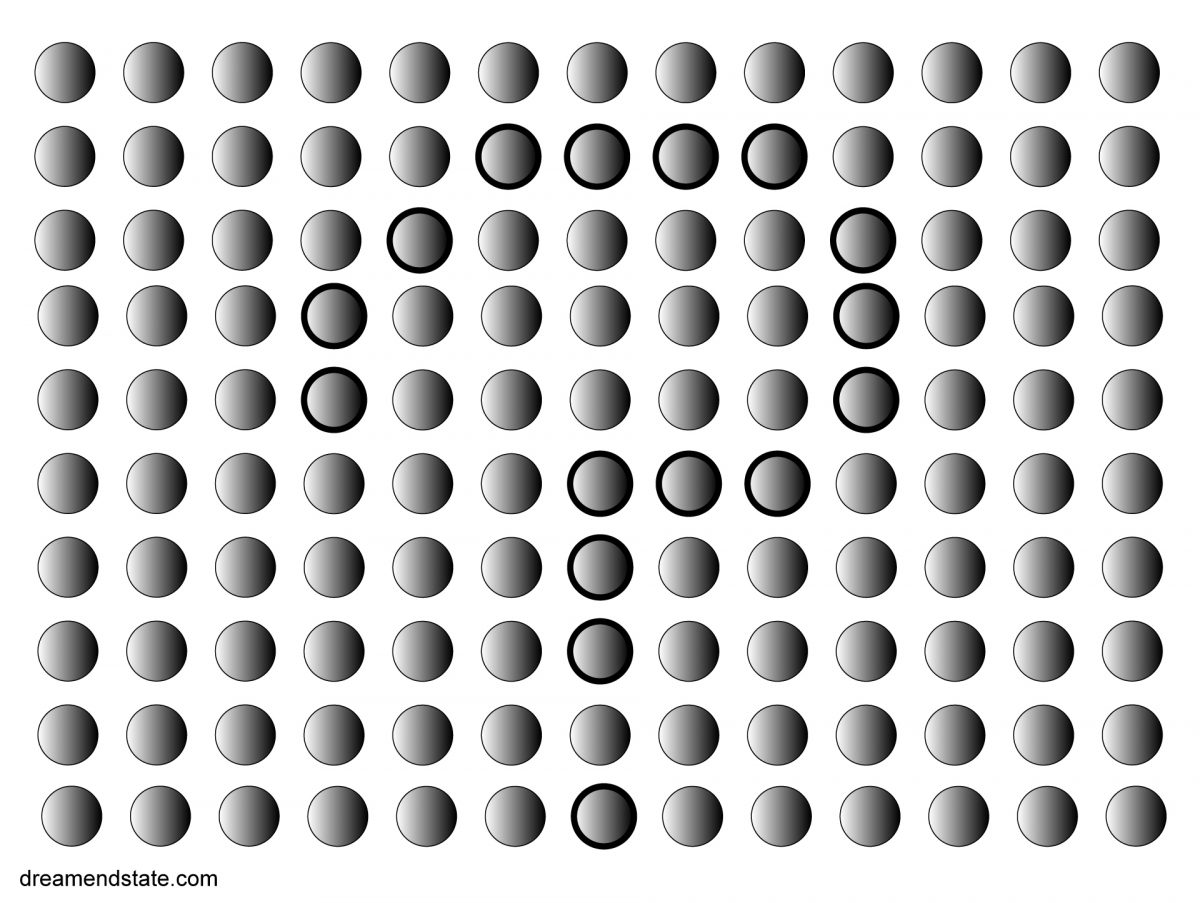

The mind tricks us into seeing patterns when there are none. This causes all kinds of problems.

According to Nassim Taleb, people routinely mistake luck for skill, randomness for determinism, and misinformation for fact. The following factors cause us to process information incorrectly and distort our view of the world:

All-or-nothing view

We tend to see events as all-or-nothing rather than as shades of probability. Ask a successful business executive why they succeeded, and they’ll tell you it was some combination of hard work and talent. But what if you look at thousands of successful business executives? Probability tells us that some of them are likely to have succeeded through sheer luck. But none of them will admit to that.
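
To put rough numbers on this, here is a minimal Python sketch of a coin-flip thought experiment like the one Taleb uses in Fooled by Randomness. The setup is an assumption for illustration: 10,000 hypothetical executives whose yearly results are pure coin flips, with a 50% chance of a good year and no skill involved at all. After five years, roughly 10,000 × 0.5⁵ ≈ 312 of them would still have an unbroken record of success.

```python
import random

def lucky_survivors(n_people: int, n_years: int, p_success: float = 0.5, seed: int = 42) -> int:
    """Count people with a perfect record when every year is a pure coin flip.

    The function name and parameters are hypothetical, chosen for this illustration.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    survivors = 0
    for _ in range(n_people):
        # all() stops at the first losing year; only unbroken winning streaks count
        if all(rng.random() < p_success for _ in range(n_years)):
            survivors += 1
    return survivors

expected = 10_000 * 0.5 ** 5          # 312.5 "winners" expected from luck alone
observed = lucky_survivors(10_000, 5)
print(f"expected by chance: {expected:.1f}, simulated: {observed}")
```

Every one of those simulated "winners" could tell a convincing story about hard work and talent, yet by construction their records are entirely down to chance.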

Affirming the consequent

Our brains slip up when they try to find patterns to explain things. Studies show that most successful business leaders are risk-takers. Does this mean that most risk-takers become successful? No: “most successes took risks” does not imply “most risks lead to success”. Ask those whose risks failed.
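
A quick back-of-the-envelope calculation shows how both statements can be true at once. The numbers below are entirely made up for illustration; the point is the difference between P(risk-taker | successful) and P(successful | risk-taker).

```python
# Hypothetical population, chosen only to illustrate the logic.
population = 100_000
risk_takers = 10_000                     # 10% of people take big risks
cautious = population - risk_takers      # the other 90,000
successful_risk_takers = 500             # 5% of risk-takers succeed
successful_cautious = 90                 # 0.1% of cautious people succeed

successful_total = successful_risk_takers + successful_cautious

# P(risk-taker | successful): look only at the winners
p_risk_given_success = successful_risk_takers / successful_total
# P(successful | risk-taker): look at everyone who took the risk
p_success_given_risk = successful_risk_takers / risk_takers
p_success_given_cautious = successful_cautious / cautious

print(f"P(risk-taker | successful) = {p_risk_given_success:.0%}")
print(f"P(successful | risk-taker) = {p_success_given_risk:.0%}")
print(f"P(successful | cautious)   = {p_success_given_cautious:.1%}")
```

With these invented numbers, about 85% of successful people are risk-takers, yet only 5% of risk-takers succeed. Risk-taking still beats caution here (5% versus 0.1%), so the point is not that risks never pay, only that most risk-takers fail and we rarely hear from them.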

Hindsight bias

We also ignore probability when it comes to past events. When we look back at things that have happened, they seem to have been inevitable. Just listen to any political pundit explain why a shock election result was obviously going to happen. As a result, we ignore the role of random chance and prefer to rationalise why something happened.

Survivorship bias

If we see only the survivors of an event, our brains assume everyone survived, or at least that the survival rate was high. Take the view that stock markets always go up in the long run: imagine how rich you’d be today if you’d invested in the stock market in 1900.

But this view is based on the stock markets and companies that have survived to this day. If you really did invest in stocks in 1900, you probably would’ve bought into the developed markets of Imperial Russia or Argentina rather than the emerging United States, and most of the companies you bought into would have failed.
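
The same distortion can be sketched with a toy simulation. Everything here is an assumption for illustration: $1 is invested in each of 1,000 hypothetical companies, each year's return is drawn at random from the same distribution, and a company whose value falls below 10 cents is treated as failed. Averaging only the companies still standing at the end paints a rosier picture than averaging everything you actually bought.

```python
import random
import statistics

def simulate_market(n_companies=1_000, n_years=50, seed=1):
    """Return the final value of $1 invested in each hypothetical company.

    Each year the value is multiplied by a random lognormal factor (an assumed
    return model); a company falling below 10 cents is marked as failed (0.0).
    """
    rng = random.Random(seed)
    finals = []
    for _ in range(n_companies):
        value = 1.0
        for _ in range(n_years):
            value *= rng.lognormvariate(0.0, 0.3)  # hypothetical yearly return
            if value < 0.1:                          # hypothetical failure threshold
                value = 0.0
                break
        finals.append(value)
    return finals

finals = simulate_market()
survivors = [v for v in finals if v > 0]
print(f"{len(survivors)} of {len(finals)} companies survived")
print(f"average final value, survivors only: {statistics.mean(survivors):.2f}")
print(f"average final value, all companies:  {statistics.mean(finals):.2f}")
```

Because the failed companies are still in the portfolio at a value of zero, the survivors-only average is always the higher of the two; a history written from the survivors inherits exactly that bias.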

Mistaking a one-off for the whole

Similar to taking an all-or-nothing view, Taleb warns us against conflating details with the ensemble. This is particularly acute when you face nefarious agents spreading disinformation (information deliberately spread to deceive) or unwitting spreaders of misinformation (incorrect or misleading information presented as fact). For instance, take the claim that there are Neo-Nazis in Ukraine: how relevant is that to the country as a whole? What proportion of the population do they represent? Unfortunately, there are Neo-Nazis in most large countries, including Russia.

Okay the problem is you take something called a ‘detail’, say an anecdote, single random event, and by emphasizing it, making you think that that detail represents the ensemble. It does not, and we are much more vulnerable to details, the salient details. Something psychologists like Danny Kahneman called the representativeness heuristic.

Nassim Nicholas Taleb, Disinformation and Fooled by Randomness

In short: an anecdote falls far short of conclusive evidence.

See also Brandolini’s law, or the bullshit asymmetry principle, which states that the energy needed to refute bullshit is an order of magnitude larger than the energy needed to produce it.

Watch out for

Together, these biases make us ignore the possibility of rare, disastrous events like a huge market crash. It’s hard for us to comprehend something that’s never happened before, so we discard the possibility, much as statisticians sometimes discard outliers to avoid skewing an average. This is a mistake, and it leaves us paralysed when rare events do happen.
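
Here is a small sketch of why treating the rare event as an outlier is dangerous. The return series is invented for illustration: 19 years of steady 8% gains followed by a single 50% crash. An outlier filter with an assumed cut-off of -20% quietly removes the one year that matters most.

```python
import math
import statistics

# Hypothetical 20 years of annual returns: steady 8% gains, then one 50% crash.
returns = [0.08] * 19 + [-0.50]

def growth(rs):
    """Final value of $1 after compounding the given annual returns."""
    return math.prod(1 + r for r in rs)

# "Clean" the data the way an outlier filter might: drop the crash year.
trimmed = [r for r in returns if r > -0.20]  # hypothetical outlier cut-off

print(f"mean annual return, crash discarded: {statistics.mean(trimmed):.1%}")
print(f"mean annual return, crash included:  {statistics.mean(returns):.1%}")
print(f"$1 grows to {growth(trimmed):.2f} without the crash, {growth(returns):.2f} with it")
```

Discarding the crash lifts the average return from about 5% to 8% and doubles the apparent final wealth. The "outlier" is not noise to be smoothed away; it is the event that decides the outcome.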

History teaches us that things that never happened before do happen.

Nassim Nicholas Taleb, Fooled by Randomness

With thanks to Ivan Edwards, who wrote most of this post. Thanks, Ivan!

Resources

Gladwell, Malcolm (April 2002), Blowing Up, The New Yorker

Taleb, Nassim Nicholas (2001), Fooled by Randomness: The Hidden Role of Chance in Life and in the Markets, Penguin